Scent as Perception: Machine Smell as a Sensory Intelligence for Image Generation

Author: Yiyi Zhang

Supervisor: Professor Mick Grierson

Degree Programme:

MSc Computing and Creative Industry (Modular)

Date of Submission: December 2025

Table of Contents

Part I

Research Context and Aims

Part II

System Design and Technical Development

Part III

Outcomes, Reflection, and Conclusion

Introduction

Research Questions

Project Description

Related Background and Theory

E-nose System Architecture

Feature Engineering & Machine Learning Models

Development Process & Challenges

Outcomes

Reflection Question

Conclusion

Core Output:

An interactive device that transforms real-time odor signals into generative visual compositions.

Research Objective:

To explore how machine olfaction can be applied as a form of sensory intelligence in human-computer interaction (HCI) and computational creativity.

Introduction

"If a machine could smell, how would it imagine what it perceives?"

Core Project Inquiry

Research Questions

Data & Translation

How can we reliably collect and classify data from an electronic nose?

How can identified scents be transformed into visual outputs (cross-modal translation)?

Experience & Art

How can scent create a more immersive, multi-sensory environment?

What new meanings emerge when scent becomes a driver of computational creativity?

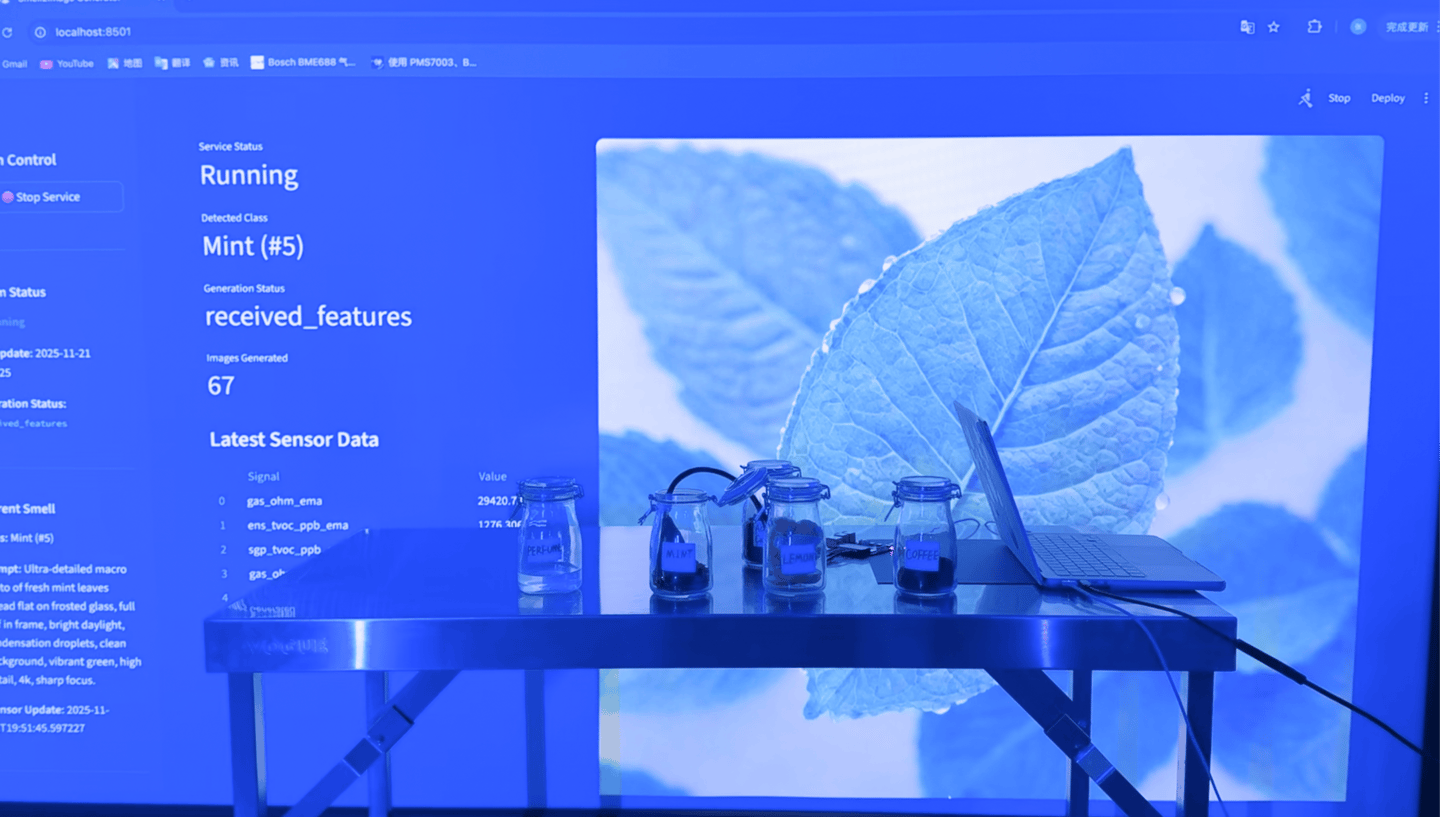

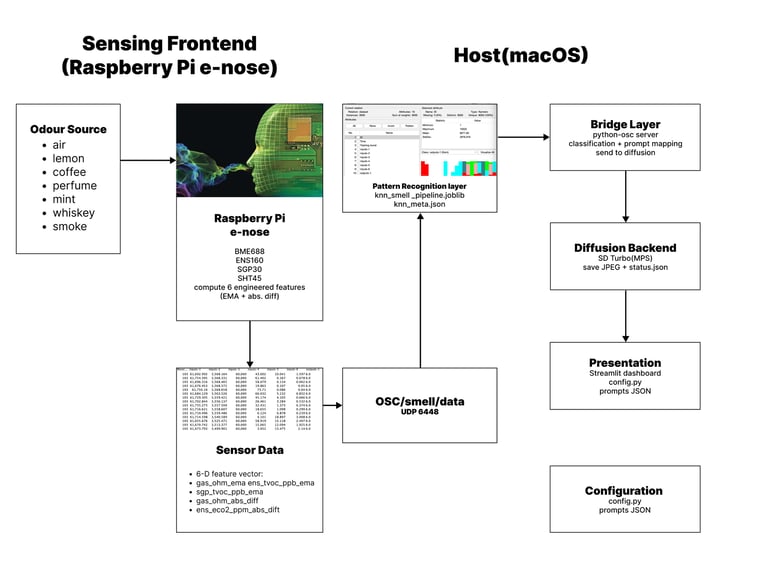

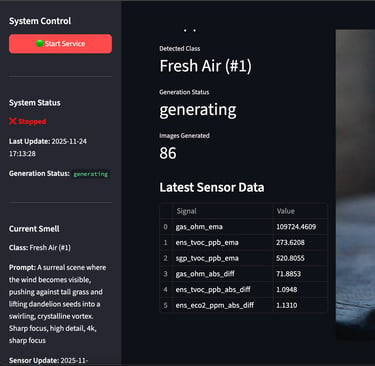

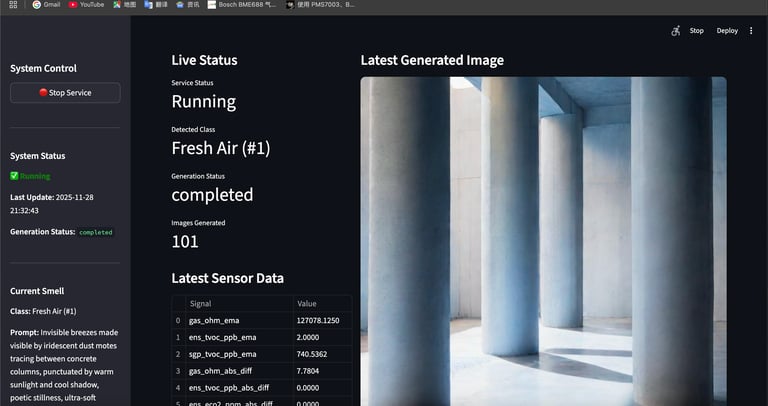

End-to-End Pipeline

Hardware Layer

The E-Nose

Raspberry Pi + BME688/ENS160/SGP30/SHT45 sensors detecting gas composition.

Classification

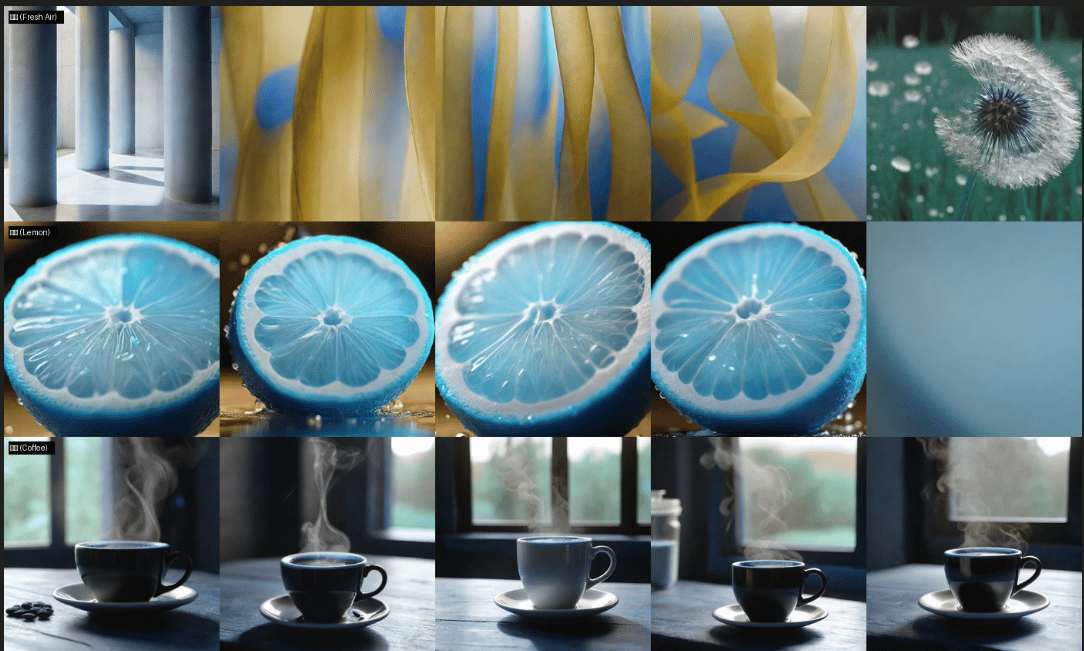

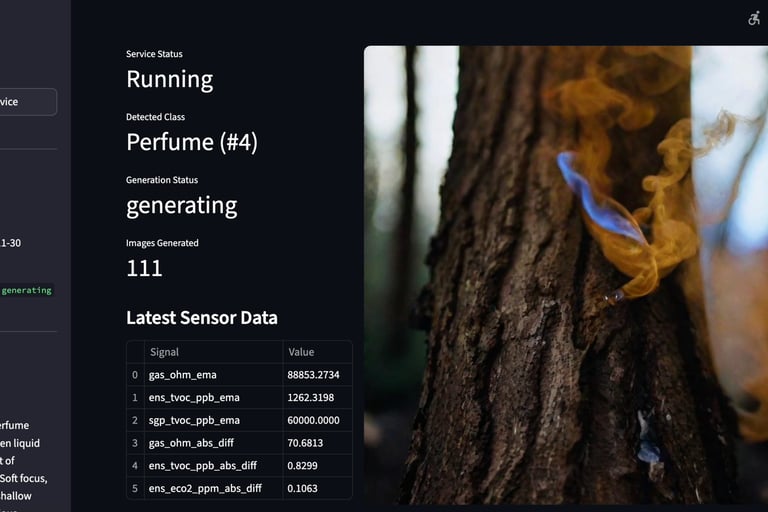

KNN model classifying 7 unique scent categories from raw data.

Visual Output

Stable Diffusion Turbo evolving artwork based on scent.

ML Layer

Generative Layer

Project Description

The Role of Smell in Human-Computer Interaction (HCI)

Current Status:

Olfaction remains largely unexplored in HCI.

Transformation:

The development of machine olfaction and low-cost gas sensors.

Creative Basis: This project continues my previous installation, Unfading Fragrance, further pushing "odor itself" to the core of the system.

Related Background and Theories

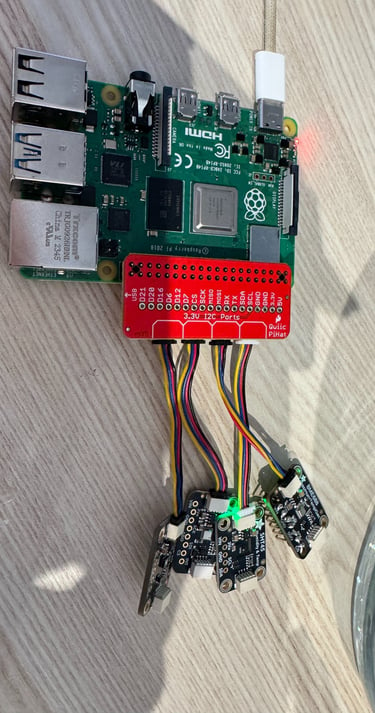

E-nose System Architecture

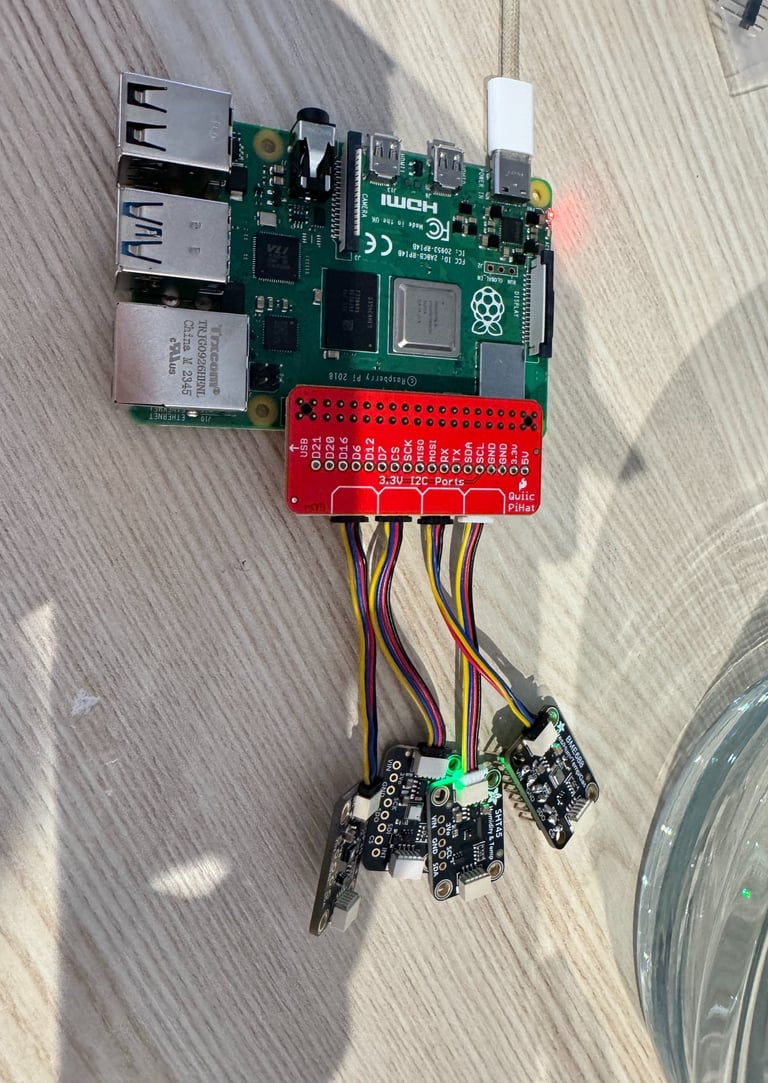

Hardware System:

The Raspberry Pi connects to the following sensors:

BME688: Gas resistance and environmental data

ENS160: TVOC and eCO₂

SGP30: TVOC and eCO₂

SHT45: For precise temperature and humidity compensation
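The slides do not say how the temperature and humidity readings are applied for compensation. One common approach, shown here only as a sketch, is to convert temperature and relative humidity into absolute humidity, a quantity that some gas sensors accept as a compensation input. The function name is hypothetical; the formula is the standard Magnus approximation.

```python
import math


def absolute_humidity_g_m3(temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate absolute humidity in g/m^3 from temperature (deg C) and RH (%)."""
    # Magnus approximation for saturation vapour pressure, in hPa.
    saturation_hpa = 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))
    # Actual vapour pressure is the saturation value scaled by relative humidity.
    vapour_hpa = saturation_hpa * rel_humidity_pct / 100.0
    # 216.7 converts hPa per kelvin into g/m^3 for water vapour.
    return 216.7 * vapour_hpa / (273.15 + temp_c)


if __name__ == "__main__":
    # Assumed example values, not measurements from the project.
    print(round(absolute_humidity_g_m3(25.0, 50.0), 2))
```

Working in absolute rather than relative humidity means a temperature change by itself does not look like a change in moisture content.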

Controlled Sampling Chamber Design:

Ensures stable temperature and humidity, consistent odor concentration, and avoids contamination and cross-diffusion.

Experimental Tools:

Uses labeled beakers, samples, activated carbon (for baseline recovery), and strict operating procedures.

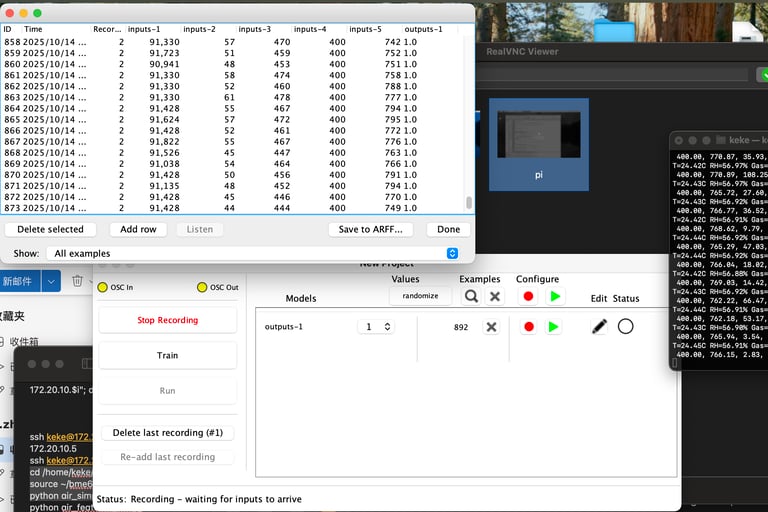

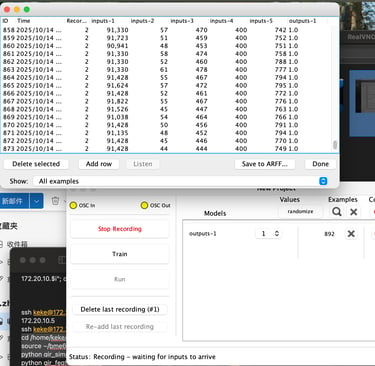

Feature Engineering & Machine Learning Models

Feature Pipeline

Raw sensor data transformed into 6 engineered features:

• 3x SoftEMA: To smooth signal fluctuations.

• 3x Absolute Differences: To capture short-term dynamic changes.

Result: Improved separability between 7 scent categories.
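The feature pipeline above can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: "SoftEMA" is not defined in the slides, so a plain exponential moving average stands in for it, and the absolute differences are assumed to be taken on the smoothed signal. The smoothing factor `alpha`, the choice of three channels, and all function names are assumptions.

```python
from typing import List, Sequence


def ema(values: Sequence[float], alpha: float = 0.2) -> List[float]:
    """Plain exponential moving average (a stand-in for the project's SoftEMA)."""
    smoothed: List[float] = []
    previous = None
    for value in values:
        # The first sample seeds the average; later samples are blended in.
        previous = value if previous is None else alpha * value + (1 - alpha) * previous
        smoothed.append(previous)
    return smoothed


def abs_diff(values: Sequence[float]) -> List[float]:
    """Absolute change between consecutive samples; the first entry is 0.0."""
    if not values:
        return []
    return [0.0] + [abs(cur - prev) for prev, cur in zip(values, values[1:])]


def build_features(channels: Sequence[Sequence[float]], alpha: float = 0.2) -> List[List[float]]:
    """Turn three equal-length sensor channels into rows of 6 features per time step."""
    smoothed = [ema(channel, alpha) for channel in channels]
    diffs = [abs_diff(series) for series in smoothed]
    # Each row holds 3 smoothed values followed by 3 absolute differences.
    return [list(row) for row in zip(*smoothed, *diffs)]


if __name__ == "__main__":
    # Made-up readings for three hypothetical channels.
    rows = build_features([[1.0, 2.0, 3.0], [10.0, 10.0, 10.0], [0.0, 4.0, 0.0]], alpha=0.5)
    for row in rows:
        print(row)
```

The smoothed values describe the current level of each channel, while the differences describe how quickly it is changing, which is the split the two bullet points describe.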

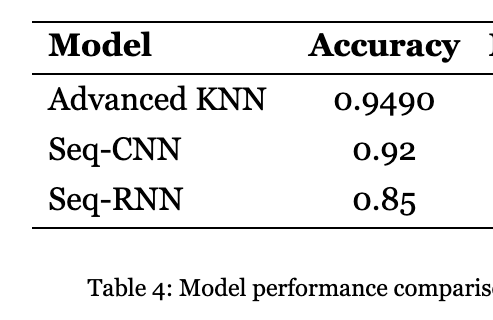

Model Selection: KNN

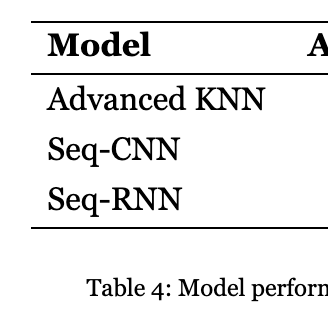

Selected K-Nearest Neighbors after testing against CNNs/RNNs.

• Accuracy: ~94% (5-fold cross-validation).

• Speed: Low latency for real-time interaction.

• Simplicity: Interpretable distances.
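To make the "interpretable distances" point concrete, here is a minimal K-Nearest Neighbors sketch in plain Python. It is not the project's code: the value of `k`, the Euclidean metric, and the labels `"scent_a"` and `"scent_b"` are placeholders, since the slides do not state them.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, scent label)


def knn_predict(train: Sequence[Sample], query: Sequence[float], k: int = 3) -> str:
    """Return the majority label among the k training samples closest to the query."""
    # Sort by Euclidean distance to the query and keep the k closest samples.
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # On a tied vote, the label whose sample appears first in `nearest` (the closer one) wins.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy 2-D data with placeholder labels; real inputs would be the 6 engineered features.
    training = [
        ([0.0, 0.0], "scent_a"),
        ([0.0, 1.0], "scent_a"),
        ([5.0, 5.0], "scent_b"),
        ([5.0, 6.0], "scent_b"),
        ([6.0, 5.0], "scent_b"),
    ]
    print(knn_predict(training, [0.2, 0.3]))
```

Because the prediction is a vote among stored samples, each decision can be traced to specific recordings. KNN is sensitive to feature scale, so features with different ranges are usually normalised first.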

Challenges

Environmental Instability (Humidity/Temp drift)

Inconsistent Concentration

Sensor Saturation (Alcohol-based scents)

Diffusion Model Latency (20–100 seconds)

Development Process & Challenges

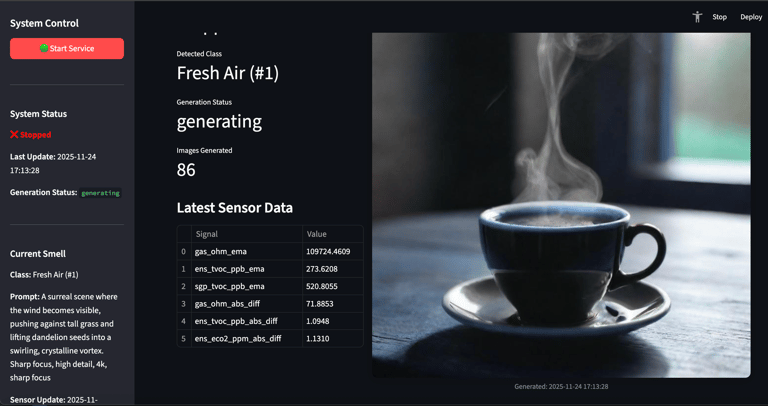

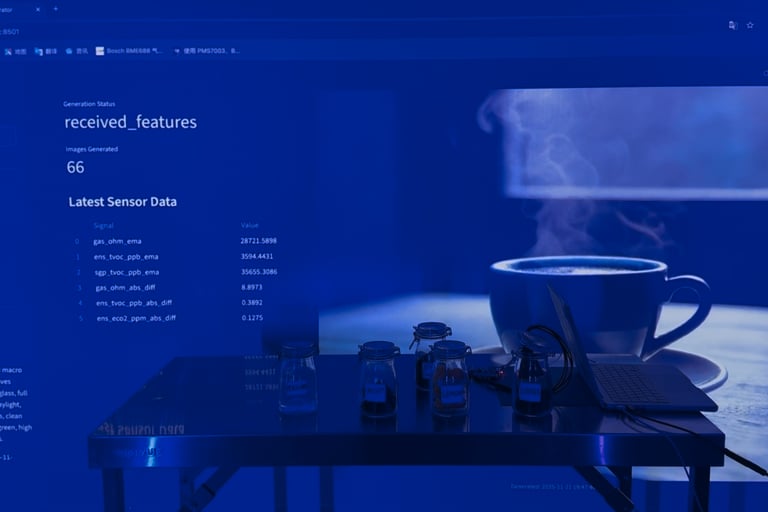

The system successfully achieved stable responses across 7 scent categories.

Cross-Modal Translation: Turning olfactory data into coherent visual generative art.

Contribution: Demonstrating how low-cost olfactory sensing can integrate into Generative AI. This project expands the design space for multi-sensory computing and offers methodological insights for future olfactory-driven creative systems.
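The cross-modal translation step could be sketched as a lookup from the classifier's label to a text prompt for the image model. Everything in this example is hypothetical: the slides do not list the 7 scent categories or any prompts, so the labels, prompt wording, and style suffix below are invented placeholders, and the call to the diffusion model itself is left out.

```python
from typing import Dict

# Hypothetical label-to-description table; not the project's actual categories or prompts.
SCENT_DESCRIPTIONS: Dict[str, str] = {
    "coffee": "warm brown swirls rising like steam",
    "lavender": "soft violet fields dissolving into mist",
    "citrus": "bright yellow bursts with sharp edges",
}

# Assumed fallback for baseline air or an unrecognised label.
NEUTRAL_DESCRIPTION = "a calm, pale, empty space"

# Assumed shared style suffix appended to every prompt.
STYLE_SUFFIX = "abstract generative art, fluid forms"


def build_prompt(label: str) -> str:
    """Map a predicted scent label to a text prompt for an image generator."""
    description = SCENT_DESCRIPTIONS.get(label, NEUTRAL_DESCRIPTION)
    return f"{description}, {STYLE_SUFFIX}"


if __name__ == "__main__":
    print(build_prompt("coffee"))
    print(build_prompt("unknown"))
```

Keeping the mapping in a plain table makes the artistic choices explicit and easy to revise separately from the sensing and classification code.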

Outcomes


Reflection Question

Why KNN?

The Methodological Choice

Why choose a simple algorithm like KNN over complex Deep Learning models?

My project shows that smell can be used as a meaningful input for generative AI.

Machine olfaction enables new forms of multi-sensory creativity.

The system demonstrates how computational tools can reinterpret the fleeting nature of smell into visual forms.

These visual outputs allow audiences to engage with and reflect on olfactory experiences in new ways.